In this post, we will discuss a complex client scenario that requires migrating large volumes of data from on-premises DCs to Azure regions across the globe. The intent is to highlight the issues encountered during such large data migrations and to explore potential solutions.

Data Migration between On-Premises and Cloud

Every Cloud migration journey involves migrating data of different sizes as one of its fundamental requirements. This data can be structured or unstructured and comes in various shapes and forms: file data residing on a NAS, database data, or data on individual devices.

There are 2 primary modes of migrating the data to the Cloud – Online, and Offline.

In the Online mode, you would migrate the data to the Cloud over a secure Internet connection. You would typically use cloud-native or third-party tools (like AzCopy, Robocopy, etc.) to migrate the data.

In the Offline mode, you would use a cloud-native data migration service offered by the respective Cloud vendor. Such services provide you with custom storage appliances onto which you copy your data and which you then ship to the Cloud vendor's DC. Examples of such Offline Cloud data migration services are Azure Data Box and AWS Snowball.

You would typically choose between the Online and Offline modes based on the following factors:

- Data size/volume to be migrated

- The availability/feasibility of secure connectivity between the source and the Cloud

- The net bandwidth availability/feasibility over the connection between the source and the Cloud

- Expected timelines for the data migration

You can also use either mode, or both, for different requirements within the same project.

In this post, we will focus only on the Offline mode of data migration. The idea is to illustrate specific issues associated with the client scenario discussed below.

Client Scenario & Requirements

Imagine that there is a global healthcare company based out of the US. It provides local healthcare services across the US, EU, and Japan geographies.

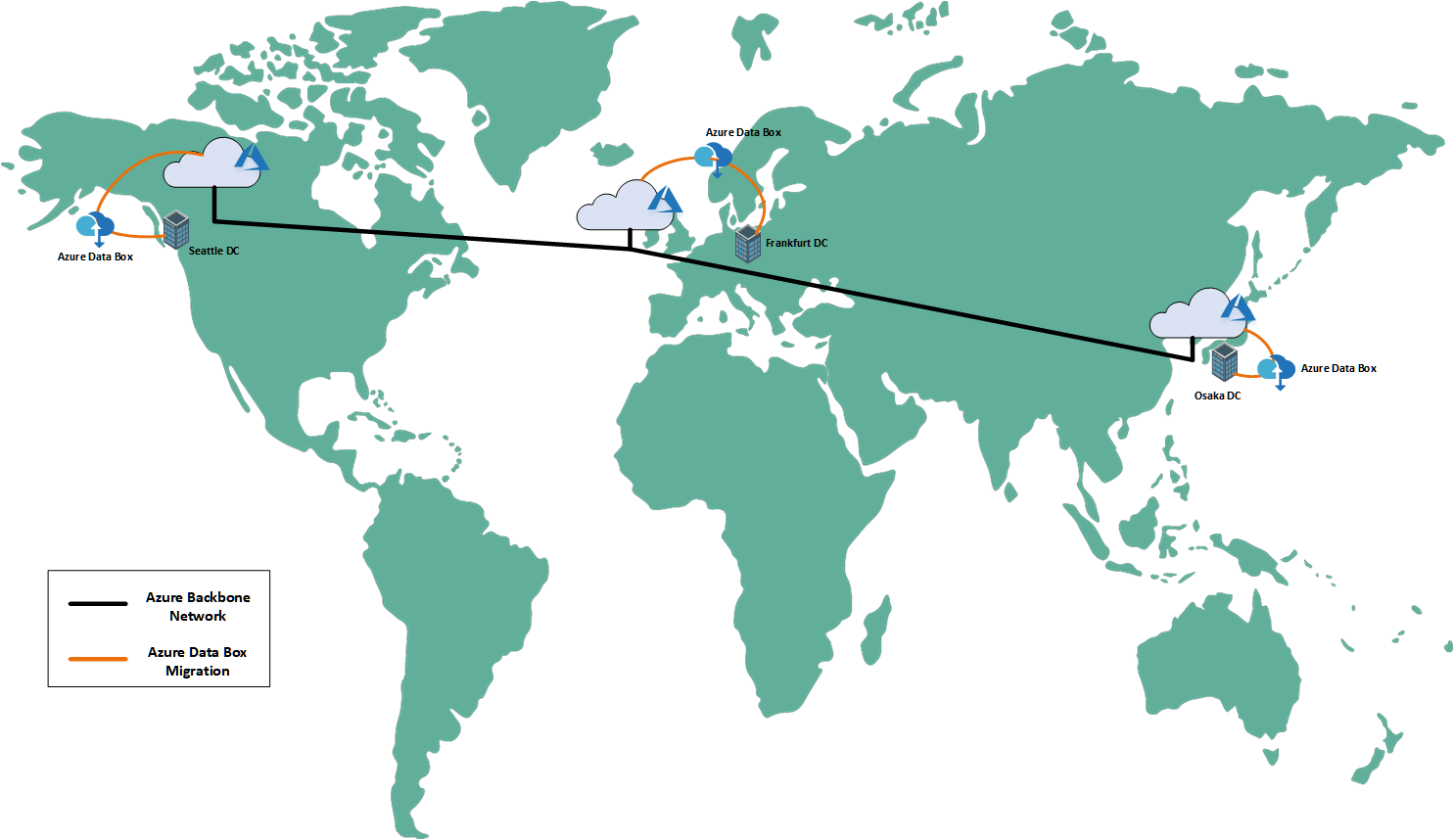

Currently, they have their own on-premises DCs in each geography – Seattle (US), Frankfurt (Germany/EU), and Osaka (Japan).

There is no traditional WAN or SD-WAN connecting these three DCs with each other.

Each client DC hosts a variable number of applications and houses large volumes of structured and unstructured data.

They want to migrate to Azure. They also want to consolidate all their applications and data across their on-premises DCs into the Azure East US region. The primary intent is to decommission the on-premises DCs in Frankfurt and Osaka after the migration is completed.

Furthermore, there is no existing connectivity between these client DCs and Azure. The client does not want to invest in such connectivity and has stringent timelines for the data migration to Azure.

Let us assume that the client currently has the following data volumes across their three DCs:

- Seattle – 15 TB

- Frankfurt – 75 TB

- Osaka – 250 TB

The client wants the above volume of data to be migrated from their respective DCs to the Azure East US region within a period of 6 weeks.
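
To put the 6-week deadline in perspective, here is a rough sketch of the average throughput each site would need if the data were sent over a network link instead. It assumes decimal terabytes (1 TB = 10^12 bytes) and a link that is fully used around the clock, which is optimistic; the function name is only illustrative.

```python
# Data volumes from the scenario, in terabytes (decimal: 1 TB = 10**12 bytes).
VOLUMES_TB = {"Seattle": 15, "Frankfurt": 75, "Osaka": 250}
DEADLINE_WEEKS = 6


def required_mbps(size_tb: float, weeks: float) -> float:
    """Average throughput (megabits per second) needed to move size_tb within the given weeks."""
    bits = size_tb * 10**12 * 8
    seconds = weeks * 7 * 24 * 3600
    return bits / seconds / 10**6


for site, size_tb in VOLUMES_TB.items():
    print(f"{site}: about {required_mbps(size_tb, DEADLINE_WEEKS):.0f} Mbps sustained")
```

Under these assumptions, Osaka alone would need roughly 550 Mbps sustained for the entire six weeks, with no allowance for retries, protocol overhead, or business-hours throttling.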

Let’s ignore the application and infrastructure migration aspects of this scenario. Our focus in the current context is only on the on-premises data migration to Azure.

Data Migration Solution

Given the large size of the data to be migrated, the absence of direct connectivity to Azure, and the stringent timelines, the only option that remains is offline data migration.

The first thought would be to use the Azure Data Box service to migrate the data in the Offline mode.

You first determine which Azure Data Box SKUs, and how many units of each, are needed at each DC. For this scenario, it would mean placing an order for the following:

- Seattle – 1 Data Box Disk

- Frankfurt – 1 Data Box

- Osaka – 1 Data Box Heavy
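
The SKU choice above can be sketched as a simple capacity lookup. The usable capacities below are assumptions based on the publicly listed figures for these SKUs and may have changed, so verify them against the current product documentation before ordering; `pick_sku` is a hypothetical helper, not part of any Azure tooling.

```python
# Approximate usable capacity per order, in TB, smallest SKU first.
# ASSUMPTION: figures taken from public product descriptions; verify before ordering.
SKU_USABLE_TB = [
    ("Data Box Disk", 35),
    ("Data Box", 80),
    ("Data Box Heavy", 770),
]


def pick_sku(size_tb: float) -> str:
    """Return the smallest single SKU whose usable capacity fits size_tb."""
    for name, usable_tb in SKU_USABLE_TB:
        if size_tb <= usable_tb:
            return name
    raise ValueError(f"{size_tb} TB does not fit in a single order; split the data")


for site, size_tb in {"Seattle": 15, "Frankfurt": 75, "Osaka": 250}.items():
    print(f"{site}: {pick_sku(size_tb)}")
```

Note that Frankfurt's 75 TB leaves very little headroom on a single Data Box under these figures, so any growth in the data before the copy would call for a second unit.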

Note: You can find more details about the Azure Data Box here: https://azure.microsoft.com/en-in/services/databox/

The next step would be to place an order for the respective Data Box units for each region.

Issue #1 – The First Obstacle

When you try to place the Azure Data Box order for the Frankfurt DC, you will notice that you cannot select any Azure region within Germany.

The Azure Data Box service is not available in Germany (Frankfurt) for any SKU type as of this time. You can verify the same from here.

What do you do now?

Since Germany is in the EU, you can still order the Data Box from another Azure region outside Germany but within the EU, for example the Azure North Europe region (Ireland). This is an advantage that countries within the EU share, and it solves the problem of not being able to order and use Azure Data Box for offline data migration from Frankfurt.

You won’t face the same challenge of ordering Azure Data Box for Seattle since it is in the US.

What about ordering the Data Box Heavy for Osaka, Japan? Fortunately, Azure Data Box Heavy is available in the Azure Japan West region (Osaka). However, it is not available in the Azure Japan East region as of this time. The other two SKUs – Data Box Disk and Data Box – are available in both the Azure Japan East and West regions.

If the Azure Data Box service were not available in any Azure region within Japan, as is the case for Germany, you would have no other way to order one.

This solves the Azure Data Box availability problem for Frankfurt. You will now proceed to place the orders for each region.

Next, you would wait for the Azure Data Box units to arrive at the respective client DCs.

Issue #2 – A Bigger Obstacle

Your original thought would be that once you receive the Azure Data Box units at the client DCs, you would locally copy your data into the respective appliances. Thereafter, you would ship them to the Azure East US region for uploading to Azure Storage.

However, the Azure Data Box cannot be shipped across sovereign borders due to data sovereignty compliance restrictions.

After copying your local data to the appliance within a particular country, you cannot ship the appliance to another country, i.e. across sovereign boundaries (except within constructs like the EU).

This also means that once you copy the data to the respective Azure Data Box appliances in Frankfurt and Osaka DCs, you won’t be able to ship them back directly to the Azure East US region.

This is true for both Microsoft Managed Shipping and Customer Managed Shipping options available for the Azure Data Box.

What do you do now?

Given this limitation, you need to change your approach to migrating the offline data to the Azure East US region.

You need to plan to ship each Azure Data Box appliance to an Azure region within the same country (or, for the EU, the same bloc) as the client DC.

For Frankfurt, you need to choose the option to ship the Azure Data Box to the Azure North Europe region in the current context.

For Osaka, you need to choose the option to ship the Azure Data Box to the Azure Japan West region (incidentally, that region is in Osaka itself).

After Microsoft receives the Azure Data Box appliance at the respective Azure regional DCs, they will upload the data to the Azure Storage in that region.

However, the client’s requirement would still remain unfulfilled. The data from the Frankfurt and Osaka DCs would now reside in Azure Storage in their respective Azure regions, not in the Azure East US region.
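
The resulting situation can be summarised in a small sketch that records where each appliance lands and flags the sites that still need a second hop. The Seattle route (shipping straight to East US) is an assumption based on both being in the US; the class and field names are illustrative only.

```python
from dataclasses import dataclass

TARGET_REGION = "East US"


@dataclass(frozen=True)
class Route:
    source_dc: str
    landing_region: str  # Azure region that receives the shipped appliance

    @property
    def needs_cross_region_copy(self) -> bool:
        """True when the data lands outside the target region."""
        return self.landing_region != TARGET_REGION


ROUTES = [
    Route("Seattle", "East US"),         # ASSUMPTION: shipped within the US
    Route("Frankfurt", "North Europe"),  # nearest EU region offering Data Box
    Route("Osaka", "Japan West"),
]

second_hop = [r.source_dc for r in ROUTES if r.needs_cross_region_copy]
print("Sites needing a cross-region copy:", second_hop)
```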

Moving data across Azure regions leveraging the Azure Backbone

To meet the client requirement of all the data migrated to the Azure East US region, you will need to take another important step.

Now you have the Frankfurt DC data uploaded to Azure Storage in the Azure North Europe region and Osaka DC data uploaded to the Azure Japan West region.

You would now need to undertake two separate data transfer activities across two different pairs of Azure regions.

- One data transfer would be from Azure Storage in the Azure North Europe region to the Azure East US region.

- Another data transfer would be from Azure Storage in the Azure Japan West region to the Azure East US region.

The data transfer method could be as simple as using the AzCopy tool from within Azure to copy the data across Azure regions.
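
As a rough illustration, the sketch below builds an `azcopy copy` command for a container-to-container copy between two storage accounts. It assumes the data was uploaded to Blob storage and that both sides are authorised with SAS tokens; the account names, container names, and tokens are hypothetical placeholders.

```python
import shlex


def azcopy_copy_command(src_account, src_container, src_sas,
                        dst_account, dst_container, dst_sas):
    """Build the argument list for a recursive blob-to-blob AzCopy transfer."""
    src = f"https://{src_account}.blob.core.windows.net/{src_container}?{src_sas}"
    dst = f"https://{dst_account}.blob.core.windows.net/{dst_container}?{dst_sas}"
    return ["azcopy", "copy", src, dst, "--recursive"]


# Hypothetical names: a North Europe account as source, an East US account as target.
cmd = azcopy_copy_command(
    "stneuropelanding", "frankfurt-data", "<source-sas>",
    "steastusfinal", "frankfurt-data", "<destination-sas>",
)
print(shlex.join(cmd))  # printable form of the command, safely quoted for a shell
```

The same command shape would apply to the Japan West to East US transfer, with the source account swapped.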

With the above step, you fulfill the client requirement of moving data from their client DCs to the Azure East US region.

You can see a visual representation of the data migration route in the featured image of this post.

Insight: Data Transfers over the Azure Backbone

Azure inter-region data transfers occur over the Azure Backbone network.

The speed of data transfer over the Azure Backbone network is presumably fast, but Microsoft does not publish any speed or bandwidth figures for such transfers. Nor has Microsoft given any concrete or indicative response to the queries I have raised on this in the past.

I have personally done several such inter-region data transfers for clients. In my experience, speeds have fluctuated anywhere between 700 Mbps and 1.5 Gbps.

I have also heard from peers who saw speeds ranging from as low as 200-300 Mbps up to 2 Gbps.
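
To see what this range of speeds means for the scenario, here is a back-of-the-envelope sketch of the cross-region copy duration. It assumes decimal terabytes and a constant speed for the whole transfer, which real transfers will not have; the speeds are the anecdotal figures above, not published numbers.

```python
def transfer_days(size_tb: float, mbps: float) -> float:
    """Days needed to move size_tb at a constant throughput of mbps."""
    seconds = size_tb * 10**12 * 8 / (mbps * 10**6)
    return seconds / 86400


OBSERVED_MBPS = [200, 700, 1500, 2000]  # anecdotal speeds quoted in this post

for site, size_tb in {"Frankfurt": 75, "Osaka": 250}.items():
    for mbps in OBSERVED_MBPS:
        print(f"{site} ({size_tb} TB) at {mbps} Mbps: {transfer_days(size_tb, mbps):.1f} days")
```

At the low end of the range, the Osaka copy alone would take longer than the client's 6-week window, so the cross-region hop needs to be part of the timeline planning rather than an afterthought.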

How does the Azure Backbone Network allocate speed/bandwidth?

The available bandwidth/speed on the Azure Backbone network varies greatly and is logically dependent on several factors.

One factor would be the distance between the source and destination region endpoints over the Azure Backbone. Greater distance means higher latency, and higher latency reduces the throughput each connection can achieve. The greater the distance, the lower the effective speed available for the data transfers.
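
The latency effect can be illustrated with the standard window-over-round-trip-time bound for a single TCP connection. This is a general networking rule of thumb, not anything specific to the Azure Backbone, and the window size and round-trip times below are hypothetical values chosen only for illustration.

```python
def max_stream_mbps(window_bytes: int, rtt_ms: float) -> float:
    """Upper bound on one TCP connection's throughput: window size / round-trip time."""
    return window_bytes * 8 / (rtt_ms / 1000) / 10**6


WINDOW_BYTES = 4 * 1024 * 1024  # hypothetical 4 MiB TCP window

for label, rtt_ms in [("shorter path", 80), ("longer path", 160)]:  # hypothetical RTTs
    print(f"{label} ({rtt_ms} ms RTT): at most {max_stream_mbps(WINDOW_BYTES, rtt_ms):.0f} Mbps per connection")
```

Doubling the round-trip time halves the per-connection ceiling, which is one reason transfer tools open many connections in parallel.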

Another factor would be the net bandwidth available to you over the Azure Backbone network. The total available bandwidth will get shared amongst all the clients operating on the Azure Backbone network. All data transfers happen on the same route. This leads to variable segmentation of the total bandwidth among all clients. This would give you a fluctuating Azure Backbone network speed/bandwidth experience every time you undertake such data transfers.

Yet another factor would be whether Microsoft intentionally throttles the Azure Backbone network bandwidth. In all likelihood, Microsoft regulates it against some pre-defined ceilings or thresholds, typically to prioritize or reserve bandwidth for critical internal Azure operations or SLA-bound client operations across Azure regions.

We can hope that Microsoft continues to upgrade its Azure Backbone network infrastructure with the latest technical innovations. This would make more bandwidth consistently available over the Azure Backbone network, helping us tackle such scenarios more effectively.

That is all for today!

Hope you find this post and the associated information useful.

I would encourage you to post any questions or share your thoughts on this topic in the comments section below.
